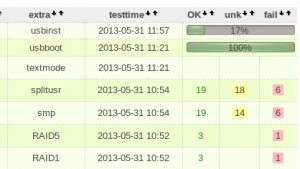

It is time for an update on openQA! Alberto Planas Domínguez discusses how to install and create tests (or “needles”) for the tool that helps keep openSUSE Factory stable.

openQA and testing openSUSE

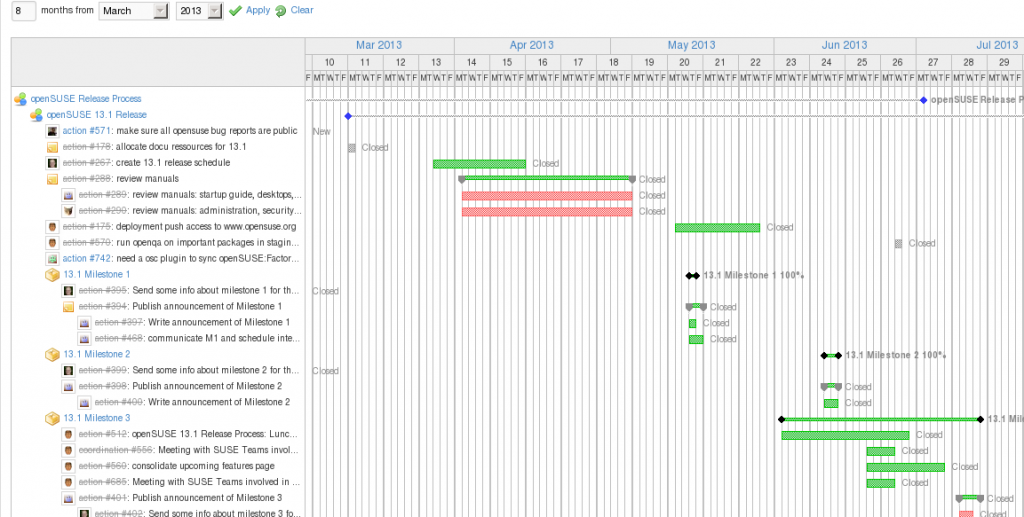

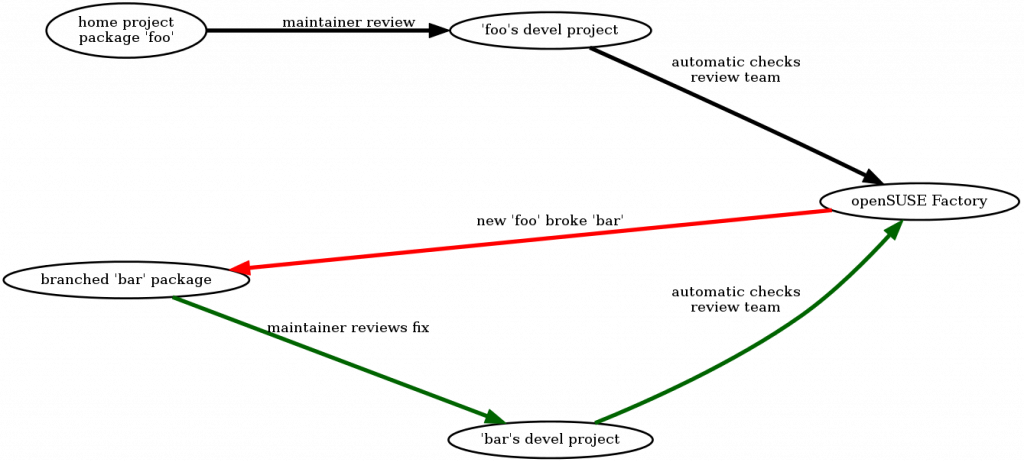

Our work on openQA was introduced a few months ago on this blog. To recap, the major improvements were better detection of failed and passed tests, by introducing ‘needles’ (PNG files with associated metadata in JSON format) and using OpenCV to determine test results, plus a host of changes to speed up the testing process and improve the web UI a bit. The work currently resides in a branch maintained by the openSUSE team on GitHub, but the original author, Bernhard Wiedemann, plans to test, integrate and deploy openQA during hackweek.

The fact that the new code isn’t running on openqa.opensuse.org yet is unfortunate but not a huge issue. For now, contributing test cases means installing and running openQA yourself, and with the packages available for openSUSE that is not a big deal.

So, let’s talk about using openQA and creating test cases!

Installing openQA

Installing openQA is easy. Add a repository in zypper, install some packages and run some scripts. You can find a full description of the installation process in the openQA Tutorial. In summary, you have to go through these steps:

Install the repository and the packages and reboot

% zypper ar http://download.opensuse.org/repositories/devel:openQA/openSUSE_12.3 devel:openQA

% zypper in openQA kvm OVMF

% reboot

Install needles and fix ownership

% /usr/lib/os-autoinst/tools/fetchneedles

% cd /var/lib/os-autoinst/needles

% sudo chown -R wwwrun distri

After that we need to make some adjustments to the Apache configuration before we can run the service. See the openQA Tutorial for the details.

Get testing

openQA tests run against ISO images, which we have to provide. So the next logical step is to download the latest development ISO image from software.opensuse.org/developer and copy it to /var/lib/openqa/factory/iso. Now we can create the jobs (different test sets) and launch the server:

% rpc.pl --host localhost iso_new /var/lib/openqa/factory/iso/*.iso

If we have systemd running, the workers will take the jobs, create a virtual machine, and run the tests in order.

Look Ma, my first test!

Once openQA is installed we can start creating and running tests. Tests are written in Perl, and use an API exported by openQA to the applications. The internal logic of a test is something like the following:

- The test sends a set of events (keystrokes) to the virtual machine

- openQA takes screenshots of the consequences of these events

- The test compares the resulting images with a database of images (or needles, see the previous openQA article for details)

We can move through the installation process using this cycle multiple times. For example, in the test 051_installation_mode.pm we can find this:

sendkey $cmd{"next"};

waitforneedle("inst-timezone", 30) || die 'no timezone';

The first command sends a keystroke to the system, in this case alt-n, because the dictionary $cmd maps the “next” key to this keystroke. The second waits up to 30 seconds for the screen to match the “inst-timezone” needle and aborts the test if it does not.

Inside a test, the main functions for sending events are sendkey(), for a single keystroke, and sendautotype(), for a full string. To assert on the current screen we can use waitforneedle(), which throws an exception if the needle is not found, or checkneedle(), which only returns whether the current image matches a needle.
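To make the difference between the two calls concrete, here is a minimal, self-contained sketch. It does not use the real openQA API: the `%screen` hash and the two stub functions are hypothetical stand-ins, named after the real calls, that only mimic the behavior described above so the snippet runs with plain Perl. The needle name `inst-partitioning` is also made up for the example.

```perl
use strict;
use warnings;

# Hypothetical stand-in for the screen: the set of needles that
# would currently match. In openQA this comes from screenshots.
my %screen = ('inst-timezone' => 1);

# Stub mimicking checkneedle(): reports a match, never dies.
sub checkneedle {
    my ($needle, $timeout) = @_;
    return $screen{$needle} ? 1 : 0;
}

# Stub mimicking waitforneedle(): dies when there is no match.
sub waitforneedle {
    my ($needle, $timeout) = @_;
    die "needle '$needle' not found\n" unless checkneedle($needle, $timeout);
    return 1;
}

# A decision: the test continues either way.
my $has_prompt = checkneedle('sudo-passwordprompt', 2);
print "password prompt visible: ", ($has_prompt ? "yes" : "no"), "\n";

# An assertion that holds: the needle matches.
my $ok = eval { waitforneedle('inst-timezone', 30) };
print "timezone screen reached\n" if $ok;

# An assertion that fails: a missing needle aborts the block.
my $failed = !eval { waitforneedle('inst-partitioning', 30); 1 };
print "assertion failed: $@" if $failed;
```

The stubs ignore the timeout; a real implementation would presumably keep checking new screenshots until the timeout expires.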

More complicated tests

Using this basic functionality we can build higher levels of abstraction to simplify the interaction between the test and the system. For example, we can build a function that types the password when we execute a sudo command. One of the problems when we run a program as root inside a test is that the system does not always ask for the root password when we call sudo. We can work around this as follows:

sub sudo {

my $passwd = shift;

my $prog = shift;

sendautotype("sudo $prog\n");

if (checkneedle("sudo-passwordprompt", 2)) {

sendautotype($passwd);

sendkey "ret";

}

}

We use checkneedle() instead of waitforneedle() to make a decision inside the test instead of making an assert and marking the test as failed.
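As a rough illustration of how such a helper behaves in both situations, the sketch below replaces the openQA calls with hypothetical stubs that record events in an array instead of sending them to a virtual machine. The `$prompt_visible` switch, the password and the command are all invented for the example.

```perl
use strict;
use warnings;

# Hypothetical stubs: record the events instead of sending them to a VM.
my @events;
my $prompt_visible = 0;    # toggled by hand to simulate both cases

sub sendautotype { push @events, "type:$_[0]" }
sub sendkey      { push @events, "key:$_[0]" }
sub checkneedle  { return $_[0] eq 'sudo-passwordprompt' && $prompt_visible }

# The sudo helper, written with a plain argument list.
sub sudo {
    my ($passwd, $prog) = @_;
    sendautotype("sudo $prog\n");
    if (checkneedle("sudo-passwordprompt", 2)) {
        sendautotype($passwd);
        sendkey("ret");
    }
}

# Run the helper once without and once with a password prompt.
for my $case (0, 1) {
    $prompt_visible = $case;
    @events = ();
    sudo('secret', 'zypper ref');
    print "prompt=$case: ", scalar(@events), " events sent\n";
}
```

Without a prompt only the command itself is typed (one event); with a prompt the password and the return key follow (three events). Either way the helper returns normally and the test goes on.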

An openQA test is in fact an object: we usually inherit from a base class and override some of its methods to run the test. The basic structure of a test is:

use base "basetest";

use strict;

use bmwqemu;

# Determine if the test can run.

sub is_applicable() {

}

# Main code of the test

sub run() {

}

# Return a map of flags that decides whether a failure

# of this test is important, or whether the VM state

# should be rolled back.

sub test_flags() {

}

1;

The method is_applicable() is called to check if the test can run for a given configuration (more about this later). If the function returns true, openQA will call the run() method. It is in this function that we need to put the test code.

The test_flags() method is used to decide what to do when the test fails. Depending on what it returns, openQA can mark the whole ISO as bad if the test fails, or mark only this test as failed and continue with the next one. There is one more interesting feature here: openQA makes snapshots of the CPU, the memory and the hard disk (using a QEMU option). If the test goes well, openQA creates a snapshot labeled ‘lastgood’ that can be restored if the next test fails. This guarantees that every test starts from a stable system, since a failing test can leave the system in an unstable state.
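The interplay of the three methods can be sketched without openQA at all. Everything below is an assumption made for illustration: the tiny `basetest` package is a stand-in for the real base class, `run_test` is a hypothetical runner rather than openQA's scheduler, and the flag name `important` is used only as an example of what test_flags() might return.

```perl
use strict;
use warnings;

# Hypothetical minimal base class so the sketch runs without openQA.
package basetest;
sub new           { return bless {}, shift }
sub is_applicable { return 1 }
sub test_flags    { return {} }

# A test that fails on purpose.
package broken_test;
our @ISA = ('basetest');
sub run { die "needle not found\n" }

# The flag name is an assumption made for this sketch.
sub test_flags { return { important => 1 } }

package main;

# Hypothetical runner mimicking the decisions described above.
sub run_test {
    my ($test) = @_;
    return 'skipped' unless $test->is_applicable();
    return 'ok' if eval { $test->run(); 1 };
    return $test->test_flags()->{important} ? 'fail-important' : 'fail';
}

print run_test(broken_test->new()), "\n";
```

Because the flags are only consulted after a failure, a test declares how serious its failure is and the runner decides what to do about it.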

Variables

When openQA creates a new test job, what it is really doing is setting a specific combination of environment variables. The test checks these variables to adjust its behavior or to discard itself (through the is_applicable() method).

For example, when we want to test the KDE Desktop Environment, openQA creates a new variable named DESKTOP with the value “KDE”. This variable can be checked in the test code using $ENV{DESKTOP}. As another example, say we want to create a test to check if Firefox is working properly on two desktops. We can use:

sub is_applicable() {

return $ENV{DESKTOP}=~/kde|gnome/i;

}

If the variable DESKTOP is not KDE or GNOME, the test will not be executed.
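The following sketch simulates how the same check behaves across several jobs. It is a variant of the method above, not the project's code: it also copes with an unset variable, ignores case and matches whole values only, and the value `xfce` is just an example of a desktop the test should skip.

```perl
use strict;
use warnings;

# Variant of the check above: safe when DESKTOP is unset,
# case-insensitive, and anchored so only whole values match.
sub is_applicable {
    return (($ENV{DESKTOP} // '') =~ /^(?:kde|gnome)$/i) ? 1 : 0;
}

# Simulate the variable combinations of different jobs.
for my $desktop (qw(kde gnome xfce)) {
    local $ENV{DESKTOP} = $desktop;
    printf "%-5s -> %s\n", $desktop, is_applicable() ? "run" : "skip";
}
```

Anchoring the pattern is a design choice: an unanchored `/kde|gnome/` would also accept any value that merely contains one of those strings.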

I want to learn more

If you want to dig into openQA, you can check the source code and the tests that now run every day in our deployed instance. You can find the source code of V1 in Bernhard Wiedemann’s repositories, github.com/bmwiedemann/os-autoinst and github.com/bmwiedemann/openQA. V2 is currently in the openSUSE GitHub account at github.com/openSUSE-Team/os-autoinst and github.com/openSUSE-Team/openQA, along with the needles. As mentioned before, Bernhard plans to integrate the V1 and V2 code during hackweek.

You can grab the code and learn by reading other tests, for example the ones in x11test.d and inst.d.

Contribute!

There is a simple way to start contributing to openSUSE through openQA:

- Install openQA

- Create a new test in x11test.d to test your application

- Send a pull request to the project with the test and the needles

Currently, these tests would then be used by the openSUSE team (running internally) to keep the quality of the upcoming release up, but once V1 and V2 are merged and deployed, you can see and act on the results on openqa.opensuse.org.

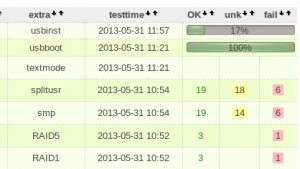

The weekly statistics

And as every week, here are last week’s top 10 contributors to openSUSE Factory. We’re pretty much in bug fixing mode now, so a big thanks to each of the contributors for helping make openSUSE 13.1 stable!

| Spot | Name |
|------|------|
| 1 | Dominique Leuenberger |
| 2 | Denisart Benjamin |
| 3 | Dirk Mueller |
| 4 | Sascha Peilicke |
| 5 | Dr. Werner Fink |
| 6 | Marcus Meissner, Bjørn Lie |
| 7 | Ismail Donmez |
| 8 | Todd R |
| 9 | Stephan Kulow, Hrvoje Senjan |
| 10 | Niels Abspoel |