In my last post, I explored the various liveCD creation methods out there, and I really wanted to try one of the others with openSUSE.

So today I did, and it took less than two hours.

I used Debian’s liveCD as a basis and replaced its userspace with the one from the openSUSE-11.4-GNOME-liveCD. Later releases likely will not work, because their systemd is not compatible with the old 2.6.32 kernel.

It worked like a charm. If you want to try it yourself, you need an openSUSE system and an empty directory with 5 GB of free space. Then run the following as root:

```sh
zypper -n in clicfs squashfs cdrkit-cdrtools-compat
wget -O Makefile http://lsmod.de/bootcd/Makefile.aufslive.11.4
make
```

Downloading the two ISOs will take a while, and the processing afterwards takes at least another 3 minutes.

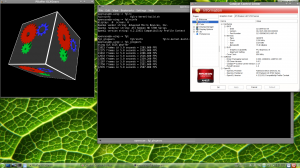

If that seems too complicated, you can instead download the finished ISO and try it with `qemu-kvm -m 1000 -cdrom xxx.iso`.

Do not let the Debian logo in the bootloader confuse you; just press Enter there.

When running in KVM from RAM, this boots in 18 seconds, while the original ISO took 33 seconds (measured from pressing Enter in the bootloader until the CPU load goes down). With physical media, however, the difference will be less pronounced. Part of the speedup comes from the faster gzip decompression. Unfortunately, Debian’s kernel does not support squashfs-xz, so I could not try that.
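The compressor is fixed when the squashfs image is built, and the kernel that later mounts the image has to support it. Below is a minimal dry-run sketch of that choice. It assumes squashfs-tools’ `mksquashfs -comp` option and assumes xz support requires Linux 2.6.38 or newer; `kernel_has_squashfs_xz` and `squashfs_cmd` are hypothetical helpers, not part of the Makefile used above.

```sh
#!/bin/sh
# Hypothetical dry-run sketch: print (not run) the mksquashfs command for a
# live root, picking the compressor from the target kernel version.
# Assumption: squashfs xz support needs Linux 2.6.38 or newer.

# Succeeds if kernel version $1 (e.g. "2.6.32-5-686") is at least 2.6.38.
kernel_has_squashfs_xz() {
    major=$(echo "$1" | cut -d. -f1)
    minor=$(echo "$1" | cut -d. -f2)
    patch=$(echo "$1" | cut -d. -f3 | cut -d- -f1)  # strip "-5-686" suffix
    [ "$major" -gt 2 ] && return 0
    [ "$major" -eq 2 ] && [ "$minor" -gt 6 ] && return 0
    [ "$major" -eq 2 ] && [ "$minor" -eq 6 ] && [ "$patch" -ge 38 ]
}

# Print the build command: $1 = source dir, $2 = image file, $3 = kernel version.
squashfs_cmd() {
    if kernel_has_squashfs_xz "$3"; then comp=xz; else comp=gzip; fi
    echo "mksquashfs $1 $2 -comp $comp -noappend"
}

# Debian's 2.6.32 kernel falls back to gzip.
squashfs_cmd rootfs filesystem.squashfs 2.6.32-5-686
```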

I hope that in the future we will have the aufs patches in our regular openSUSE kernels and can add an aufs-live mode to kiwi. That would help with the problems we hit with clicfs when memory runs out, since there it cannot be freed by deleting files either.
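An aufs-based live system typically stacks a writable tmpfs branch on top of the read-only compressed image, so files written at runtime land in RAM, and deleting them frees that RAM again. Below is a minimal dry-run sketch of such a mount sequence. It assumes the standard aufs `br=` mount option and only prints the commands. The image path and mount points (`/live/filesystem.squashfs`, `/run/live`) are hypothetical and not what Debian’s initramfs or kiwi actually use.

```sh
#!/bin/sh
# Hypothetical dry-run sketch of an aufs live root: it prints the mount
# commands instead of running them, so no root or aufs module is needed.
# Paths are illustrative assumptions only.

# Build the aufs branch list: writable branch first, read-only image below.
aufs_opts() {
    rw_dir=$1
    ro_dir=$2
    printf 'br=%s=rw:%s=ro\n' "$rw_dir" "$ro_dir"
}

# Print the mount sequence: $1 = squashfs image, $2 = base dir for mount points.
aufs_live_plan() {
    image=$1
    base=$2
    echo "mount -t squashfs -o loop,ro $image $base/ro"   # compressed, read-only
    echo "mount -t tmpfs tmpfs $base/rw"                   # RAM-backed writes
    echo "mount -t aufs -o $(aufs_opts "$base/rw" "$base/ro") none $base/root"
}

aufs_live_plan /live/filesystem.squashfs /run/live
```

Because writes go to a plain tmpfs branch, removing a file created at runtime returns its memory; deleting a file that only exists in the read-only image just records a small whiteout entry in the writable branch.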
