update: new image with kernel-3.6 and minimal X11/icewm http://www.zq1.de/~bernhard/linux/opensuse/raspberrypi-opensuse-20130911x.img.xz (103MB)

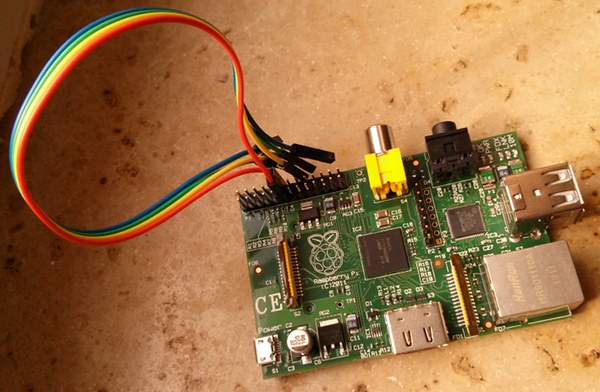

We have a new armv6-based image for the Raspberry Pi.

This one is only 82MB compressed, so pretty minimalistic.

http://www.zq1.de/~bernhard/linux/opensuse/raspberrypi-opensuse-20130907.img.xz

The exciting new thing is that this was created using an alternative image-building automation, which I wrote from scratch in three hours this morning.

The scripts can be found at

https://build.opensuse.org/package/show/devel:ARM:Factory:Contrib:RaspberryPi/altimagebuild

and are also embedded within the image under /home/abuild/rpmbuild/SOURCES/

This means that everyone can now easily build their own images the way they like, and even branch the package and send submit requests for changes that are useful to others.

The way to use this is simple.

If you have 6GB of RAM, you can speed things up by running export OSC_BUILD_ROOT=/dev/shm/arm before you do

osc co devel:ARM:Factory:Contrib:RaspberryPi altimagebuild

cd devel:ARM:Factory:Contrib:RaspberryPi/altimagebuild

bash -x main.sh
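As a rough sketch of the RAM check mentioned above, the snippet below only sets OSC_BUILD_ROOT when the machine reports enough memory. The helper name pick_build_root and the MIN_RAM_KB variable are made up for illustration and are not part of the published scripts. Note that MemTotal usually reads a little below the installed amount, so the threshold may need lowering.

```shell
#!/bin/bash
# Hypothetical helper: choose a RAM-backed build root only if enough memory exists.
# The 6 GB figure comes from the post; MIN_RAM_KB is an assumed override knob.
MIN_RAM_KB=${MIN_RAM_KB:-$((6 * 1024 * 1024))}

pick_build_root() {
    local mem_kb=$1                 # total RAM in kB
    if [ "$mem_kb" -ge "$MIN_RAM_KB" ]; then
        echo /dev/shm/arm           # build root in RAM, as suggested in the post
    else
        echo ""                     # empty: keep the osc default build root
    fi
}

# Read total memory from /proc/meminfo; fall back to 0 if it is unavailable.
mem_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo 2>/dev/null || echo 0)
root=$(pick_build_root "${mem_kb:-0}")

if [ -n "$root" ]; then
    export OSC_BUILD_ROOT=$root
fi
echo "OSC_BUILD_ROOT=${OSC_BUILD_ROOT:-<osc default>}"
```

After this, the osc co, cd and bash -x main.sh commands run as shown above.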

This pseudo-package does not easily build within OBS or osc alone because it needs root permissions for some of the steps (chroot, mknod, mount), which could only be worked around with User-Mode-Linux or by patching osc.
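Since some steps need root, a small pre-flight check can make a permission problem obvious before the build starts. This is only a sketch: is_root is a hypothetical helper, and how the published main.sh actually obtains root is not covered here.

```shell
#!/bin/bash
# Hypothetical pre-flight check; not part of the published scripts.
# With no argument it tests the current user; an explicit UID can be passed instead.
is_root() {
    [ "${1:-$(id -u)}" -eq 0 ]
}

if is_root; then
    echo "running as root: chroot, mknod and mount should be permitted"
else
    echo "not root: the chroot, mknod and mount steps may fail or ask for privileges" >&2
fi
```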

The build consists of three steps that can be seen in main.sh:

- First, osc build is used to pull in required packages and set up the armv6 rootfs.

- Second, mkrootfs.sh modifies a copy of the rootfs under .root to contain all required configs

- And finally, mkimage.sh takes the .root dir and creates a .img from it that can be booted

This can build an image from scratch in three minutes. And my Raspberry Pi booted successfully with it within 55 seconds.

There are some remaining open issues:

- the repo key is initially untrusted

- still uses old 3.1 kernel (solved)

- build scripts have no error handling
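One possible way to address the missing error handling is to wrap each build step so that a failure is reported and stops the run. The following is a minimal sketch under that assumption. run_step and build_all are hypothetical names, and the true commands are stand-ins for the real steps, not the contents of main.sh.

```shell
#!/bin/bash
# Sketch only: run_step, build_all and the stand-in commands are hypothetical,
# not taken from the published main.sh.

# Run one named step; report which step broke and pass the failure on.
run_step() {
    local name=$1
    shift
    echo "==> $name"
    if ! "$@"; then
        echo "step '$name' failed: $*" >&2
        return 1
    fi
}

# Stop at the first failing step instead of continuing with a broken rootfs.
# Replace each "true" with the real command for that step.
build_all() {
    run_step "fetch packages" true &&
    run_step "configure rootfs" true &&
    run_step "create image" true
}

build_all && echo "build finished"
```

A common complement in bash scripts is set -euo pipefail near the top, which aborts on unhandled failures and unset variables.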

Compared to the old image, this one has some advantages:

- It is easier to resize because the root partition is the last one

- Compressed image is much smaller

- Reproducible image build, so easy to customize

- It is armv6 with floating point support, so could be faster

- We have over 5200 successfully built packages from openSUSE:Factory:ARM, so, for example, you can install a minimalistic graphical environment with zypper install xauth twm xorg-x11-server xinit and start it with startx

So if you want to play with openSUSE on the RPi, you can do so right now and have a lot of fun.