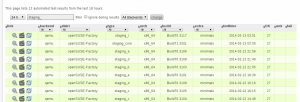

As you might have read in our previous post, our most important milestone in improving openQA is to make it possible to run the new version in public, accessible to everyone. To achieve this we need to add support for some form of authentication. This post is about what we chose to support and why.

So what do we need?

openQA has several components that communicate with each other. The central point is the openQA server. It coordinates execution, stores the results and provides both the web interface for human beings and a REST-like API for the less-human beings. The REST API is needed for the so-called ‘workers’, which run the tests and send the results back to the server, but also for client command line utilities. Since this API can be used to schedule new tests, control job execution or upload results to the server, some kind of authentication is needed. We don’t want to write a whole article about REST authentication, but maybe some overview of the general problem could be useful to explain the chosen solution.

Authentication vs authorization

Let’s first clarify two related concepts: authentication vs authorization. Web authentication is easy when there is no API involved. You simply have a user and a password for accessing the service. You provide both to the server through your web browser so the server knows that you are the legitimate owner of the account, that’s all. That means a (hopefully different) combination of user and password for each service, which is annoying and insecure. This is where openID comes into play. It allows any website to delegate authentication to a third party (an openID provider) so you can log in to that website using your Google, GitHub or openSUSE account. In any case, it doesn’t matter if you are using a site-specific user, an openID user or another alternative system: authentication is always about proving to the server that you are the legitimate owner of the account so you can use it for whatever purpose on the website.

But what if you also provide an API meant to be consumed by applications instead of humans using a web browser? That’s where authorization comes into play. The owner of the account issues an authorization that can be used by an application to access a given subset of the information or the functionality. This authorization has nothing to do with the system used for the actual authentication. The application has no access to the user credentials (username or password) and is not impersonating the user in any way. It is just acting on his or her behalf for a limited subset of actions. The user can decide to revoke this authorization at any time (and authorizations usually simply expire after a certain period of time). Of course, a new problem arises: we need to verify that every request comes from a legitimately authorized entity.

Technologies we picked

We need a way to authenticate users, a way to authorize the client applications (like the workers) to act on their behalf and a way to check the validity of the authorizations. We could rely on the openSUSE login infrastructure (powered by Access Manager), but we want openQA to be usable and secure for everybody willing to install it, whether they are inside or outside the openSUSE umbrella.

openID

There’s not much to say about user authentication; openID was the obvious choice. It is open, highly standardized, widely supported and compatible with the openSUSE system and with some popular providers like Google or GitHub. Even more important, it is a great way to save ourselves the work and the risk of managing user credentials.

authorization – harder

Judging by popularity and standardization, the equivalent of openID for authorization would be oAuth, but a closer look revealed some drawbacks, complexity being the first. oAuth is also not free of controversy, which has resulted in three alternative specifications: 1.0, 1.0a and 2.0. Despite what the numbers suggest, the different specifications are not just versions of the same thing, but different approaches to the problem, each with its own supporters and detractors. Using oAuth would mean enabling the openQA server to act as an oAuth provider and the different client applications to act as oAuth consumers. Aside from the complexity and the various versions of the standard, one of the steps of the oAuth authorization flow (used for adding a new script or tool) is redirecting the user to the oAuth provider web page in order for him/her to explicitly authorize the application. That means a browser is needed, which is not nice at all when the consumer is a command line tool (usually executed remotely). We want to keep it simple and we don’t want to run a browser to configure the workers or any other client tool. Let’s look for less popular alternatives.

There are two sides to the authorization coin: identifying who is sending the request (and hence the privileges) and verifying that the request is legitimate and the server is not being cheated about that identity. The first part is usually achieved with an API key, a useful solution for open APIs in order to keep service volume under control, but not enough for a full authorization system. To achieve the second part, there is a strategy which is both easy to implement and safe enough for most cases: HMAC with a “shared secret”.

In short, every authorization includes two automatically generated random keys: the already mentioned API key and an additional private one, which is known only to the server and to the application using that authorization. In each request, several headers are added: at least one with the timestamp of the request, another with the API key and a third one with a keyed hash of the request itself (including the timestamp and the parameters). That hash is computed with HMAC on top of a hash function such as SHA-1, using the private key as the HMAC key. Note that this is hashing, not encryption: the request is not hidden, only signed. On the server, requests with old timestamps are discarded, which limits the replay of captured requests. For fresh requests, the API key header is used to look up the associated private key, the same HMAC is computed over the request and the result is compared with the corresponding header to verify both the integrity of the request and the legitimacy of the sender.
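To make the flow above more concrete, here is a minimal Python sketch of both sides of the exchange. It is only an illustration under stated assumptions, not the actual openQA implementation: the header names (`X-API-Key`, `X-API-Timestamp`, `X-API-Hash`), the way the request is serialized before hashing, the five-minute freshness window and the function names are all made up for this example.

```python
import hashlib
import hmac
import time
from urllib.parse import urlencode

# Assumption: requests older (or newer) than this many seconds are rejected.
MAX_AGE_SECONDS = 300


def canonical_message(method, path, params, timestamp):
    """Serialize the request into the string that gets signed.

    The exact layout is hypothetical; client and server only need to agree on it.
    Parameters are sorted so that their order does not change the hash.
    """
    query = urlencode(sorted(params.items()))
    return f"{method.upper()}\n{path}\n{query}\n{timestamp}"


def sign_request(method, path, params, api_key, api_secret, timestamp=None):
    """Client side: build the three headers (hypothetical names)."""
    timestamp = int(time.time()) if timestamp is None else int(timestamp)
    message = canonical_message(method, path, params, timestamp)
    digest = hmac.new(api_secret.encode(), message.encode(), hashlib.sha1).hexdigest()
    return {
        "X-API-Key": api_key,
        "X-API-Timestamp": str(timestamp),
        "X-API-Hash": digest,
    }


def verify_request(method, path, params, headers, secrets_by_key, now=None):
    """Server side: check freshness, look up the secret, recompute the HMAC."""
    now = time.time() if now is None else now
    try:
        timestamp = int(headers["X-API-Timestamp"])
        secret = secrets_by_key[headers["X-API-Key"]]
        received = headers["X-API-Hash"]
    except (KeyError, ValueError):
        return False  # missing header, unknown API key or malformed timestamp
    if abs(now - timestamp) > MAX_AGE_SECONDS:
        return False  # stale request, possibly a replay
    message = canonical_message(method, path, params, timestamp)
    expected = hmac.new(secret.encode(), message.encode(), hashlib.sha1).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, received)
```

A client would call `sign_request("POST", "/api/v1/jobs", {...}, key, secret)` and attach the returned headers; the server would call `verify_request` with the same method, path and parameters it received. Changing any parameter after signing makes the verification fail, which is the integrity check described above.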

We decided to go for this as it is both simple and secure enough for our use case. Of course, the private key still needs to be stored on both the server and the client side, but it’s not the user’s password. It’s just a random shared secret with no relation to the real user credentials or identity. It can also be revoked at any point and can only be used for actions like uploading a test result or canceling a job. That is secure enough for a system like openQA, with no personal information stored and no critical functionality exposed.
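As a rough illustration of why such a secret is unrelated to the user’s credentials, the following Python sketch issues and revokes random key pairs. The `ApiKeyStore` class, its method names and the 16-character key length are assumptions made for this example only; a real server would keep the pairs in its database rather than in memory.

```python
import secrets


class ApiKeyStore:
    """Hypothetical in-memory store of issued authorizations."""

    def __init__(self):
        self._entries = {}  # API key -> (user name, shared secret)

    def issue(self, user):
        """Create a fresh random key pair for the given user."""
        # Both values are random; neither is derived from the user's password.
        api_key = secrets.token_hex(8).upper()
        api_secret = secrets.token_hex(8).upper()
        self._entries[api_key] = (user, api_secret)
        return api_key, api_secret

    def lookup(self, api_key):
        """Return (user, secret), or None if the key is unknown or revoked."""
        return self._entries.get(api_key)

    def revoke(self, api_key):
        """Forget the pair; return True if there was something to revoke."""
        return self._entries.pop(api_key, None) is not None
```

Revoking a pair only invalidates the client that was using it; the user’s openID login is untouched, and a new pair can be issued at any time.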

Conclusion

So, we’ve implemented openID and are working on a lightweight HMAC-based authorization system. Next week we’ll update you on the other things we are working on, including the progress of our staging projects!

This week, Michal and Tomáš write about their visit to Installfest 2014 in Prague.
